
In this paper, I will use the symbol ![]() to denote an identity matrix. The

symbol

to denote an identity matrix. The

symbol ![]() refers to a vector containing the

refers to a vector containing the ![]() th column of the identity

matrix. To

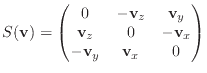

denote the skew-symmetric matrix form of a vector cross product, I will use

the notation

th column of the identity

matrix. To

denote the skew-symmetric matrix form of a vector cross product, I will use

the notation
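The notation symbol for the skew-symmetric matrix is missing from this copy, so the following Python sketch uses a hypothetical helper `skew(a)`. It builds the standard matrix whose product with any vector `b` equals the cross product of `a` and `b`. The names `skew`, `matvec` and `cross` are illustrative and are not taken from the paper.

```python
def skew(a):
    """Hypothetical helper: 3x3 skew-symmetric matrix S with S b == a x b."""
    ax, ay, az = a
    return [[0.0, -az, ay],
            [az, 0.0, -ax],
            [-ay, ax, 0.0]]

def matvec(m, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]

def cross(a, b):
    """Ordinary component-wise cross product a x b, for comparison."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

a = [1.0, 2.0, 3.0]
b = [4.0, 5.0, 6.0]
print(matvec(skew(a), b))  # [-3.0, 6.0, -3.0]
print(cross(a, b))         # [-3.0, 6.0, -3.0]
```

Writing the cross product as a matrix product lets it be combined with other matrices, for example inside a Jacobian. The matrix is skew-symmetric: its transpose is its negation.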

I will also define the vector ![]() as the transpose of the ![]()th row of ![](). Note that ![]() is a column vector, even though it represents a row of the original ![]() matrix.
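The two definitions above, the identity-matrix column and the transposed row, fit together. Multiplying the transpose of a matrix by the ith identity column picks out the ith row of the original matrix as a column vector. The following Python sketch checks this. It assumes 0-based indices (Python's convention, which may differ from the paper's), and the helper names are hypothetical.

```python
def identity(n):
    """n x n identity matrix as a list of rows."""
    return [[1.0 if r == c else 0.0 for c in range(n)] for r in range(n)]

def unit(i, n):
    """The i-th column of the n x n identity matrix (0-based i)."""
    return [row[i] for row in identity(n)]

def transpose(m):
    """Swap rows and columns of a list-of-rows matrix."""
    return [list(col) for col in zip(*m)]

def matvec(m, v):
    """Matrix (list of rows) times vector."""
    return [sum(m_rc * v_c for m_rc, v_c in zip(row, v)) for row in m]

def row_as_column(a, i):
    """Hypothetical helper: transpose of the i-th row of a, as a flat vector."""
    return list(a[i])

A = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0],
     [7.0, 8.0, 9.0]]
print(row_as_column(A, 1))               # [4.0, 5.0, 6.0]
print(matvec(transpose(A), unit(1, 3)))  # [4.0, 5.0, 6.0]
```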

I will use a hat (e.g. ![]()) to refer to a normalised vector of unit length, and omit the hat (e.g. ![]()) for an unnormalised vector. This is the opposite convention to that used in my original project report.
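To make the hat convention concrete, the following Python sketch produces the normalised ("hatted") copy of an unnormalised vector. The function names are hypothetical. The zero-length check is my own addition, since a zero vector has no direction to normalise.

```python
import math

def norm(v):
    """Euclidean length of v."""
    return math.sqrt(sum(c * c for c in v))

def normalised(v):
    """Unit-length copy of v (the 'hatted' vector); v must be non-zero."""
    length = norm(v)
    if length == 0.0:
        raise ValueError("cannot normalise a zero-length vector")
    return [c / length for c in v]

n = [3.0, 0.0, 4.0]    # unnormalised vector (no hat)
n_hat = normalised(n)  # normalised vector (hat)
print(n_hat)           # [0.6, 0.0, 0.8]
```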

I will retain Baraff & Witkin's notation and use ![]() to refer to the positions of points, not ![]() as in Macri's paper. Furthermore, I will refer to the points of a triangle as ![](), ![]() and ![](), and not use the ![](), ![]() and ![]() subscripts used by the other two papers. For the points of an edge, I will likewise use subscripts of 0, 1, 2 and 3 rather than the ![](), ![](), ![]() and ![]() subscripts used by Macri.

When referring to points, I will use ![]() and ![]() to denote two possibly distinct points. I will use the subscript ![]() to refer to the ![]()th component of ![](), and likewise the subscript ![]() for the ![]()th component of ![](). I will also sometimes use subsubscripts of ![](), ![]() and ![]() to refer to the components.
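Baraff & Witkin stack the positions of all n particles into a single vector of length 3n. The following Python sketch shows one way to address "component s of point m" in such a stacked array. The layout is an assumption: 0-based indices, with the three components of each point stored consecutively in x, y, z order. The helper names are hypothetical.

```python
# Assumed mapping from component letters to 0-based component indices.
COMPONENTS = {"x": 0, "y": 1, "z": 2}

def flat_index(m, s):
    """Index of component s (0, 1 or 2) of point m in a stacked 3n-vector."""
    if s not in (0, 1, 2):
        raise ValueError("component index must be 0, 1 or 2")
    return 3 * m + s

def component(positions, m, s):
    """Read component s of point m from a flat list [p0x, p0y, p0z, p1x, ...]."""
    return positions[flat_index(m, s)]

positions = [0.0, 0.0, 0.0,   # point 0
             1.0, 0.0, 0.0,   # point 1
             0.0, 1.0, 0.0]   # point 2
print(component(positions, 1, COMPONENTS["x"]))  # 1.0
print(component(positions, 2, COMPONENTS["y"]))  # 1.0
```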

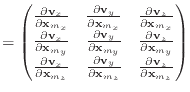

I like to think of the derivatives in this paper in terms of their components. Both Baraff & Witkin and Macri differentiate with respect to a vector in their papers, giving quantities like


This type of formula is common in some areas of graphics; for example, the Jacobian matrix has this form. However, what happens when we take the second derivative of ![]()? It yields a third-order tensor, a ![]() linear algebraic quantity. Working with such tensors is unnecessarily complicated, especially for people (like me) who never covered tensors in their undergraduate education. In this paper, I will therefore try to avoid differentiation with respect to a vector. Instead, I will do most of the work on a component-by-component basis, using only differentiation with respect to a scalar. With this approach, I will only ever need vectors and scalars, not matrices or higher-order tensors, which makes operations such as cross products and dot products straightforward.
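The following Python sketch illustrates the component-by-component approach. Every entry of the 3×3 block of second derivatives of a scalar function, taken with respect to two points, is obtained from a scalar second derivative with respect to one component of each point. No tensor is ever formed. Several choices here are illustrative rather than the paper's method:

* The example function `distance` (the length of the vector between point 0 and point 1) is hypothetical.
* Central finite differences stand in for the analytic derivatives the paper derives.
* Positions use the assumed stacked layout (index 3m + s).
* Rows index the first point's components and columns the second point's.

```python
import math

def distance(p):
    """Hypothetical scalar example: |p_0 - p_1| for stacked positions p."""
    d = [p[i] - p[3 + i] for i in range(3)]
    return math.sqrt(sum(c * c for c in d))

def mixed_partial(f, p, i, j, h=1e-4):
    """Central-difference estimate of d^2 f / (dp_i dp_j) using scalar steps only."""
    def f_shifted(di, dj):
        q = list(p)
        q[i] += di   # step along scalar component i
        q[j] += dj   # step along scalar component j (may equal i)
        return f(q)
    return (f_shifted(h, h) - f_shifted(h, -h)
            - f_shifted(-h, h) + f_shifted(-h, -h)) / (4.0 * h * h)

def hessian_block(f, p, m, n, h=1e-4):
    """3x3 block: row s, column t approximates d^2 f / (d p_{m,s} d p_{n,t})."""
    return [[mixed_partial(f, p, 3 * m + s, 3 * n + t, h) for t in range(3)]
            for s in range(3)]

p = [0.0, 0.0, 0.0,   # point 0
     2.0, 0.0, 0.0]   # point 1
for row in hessian_block(distance, p, 0, 1):
    print([round(v, 3) for v in row])
```

For a distance function, the analytic cross block is the negated projection (I − d̂ d̂ᵀ) divided by the length |d|, where d is the difference between the two points. Here d lies along the x axis with length 2, so the printed block should be close to diag(0, −0.5, −0.5). The block with both derivatives taken with respect to point 0 should be close to diag(0, 0.5, 0.5).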

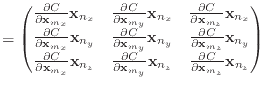

Some of Baraff & Witkin's formulas involve taking a second derivative with respect to two vectors. For these, just remember the layout of the resulting matrix. For example,

|

|